Terrorist groups have a new tool in their toolbox – Artificial Intelligence (AI). It is being used by a rising generation of members of Hizbullah, Hamas, and Yemen's Ansar Allah Movement (Houthis) and their ilk to win new supporters and followers. Pro-ISIS and pro-Al-Qaeda figures involved with these groups' online activity frequently discuss, on their closed encrypted platforms, how to best use AI. As one ISIS supporter recently exhorted, "In order to move forward in the future, we need to learn how to use the new technology."

Children's high susceptibility to AI-generated content gives terrorist groups new opportunities to exploit them. Policy should be put in place now to respond to and counter this activity, which is evolving at breakneck speed. The young extremists being trained in AI are themselves using it to radicalize others, particularly their peers. AI-generated content disseminated online by terrorist organizations provides an easy path to indoctrination.

The Al-Azaim Media Foundation, a media outlet linked to the Islamic State Khorasan Province (ISKP), recently released an extensive article in its English-language monthly magazine "Voice of Khurasan" discussing the use of AI in military and geopolitical settings. The article also stressed that AI matters not only to "scientists" and "scholars" but is becoming a force that shapes warfare and even education.

On May 27, Europol announced a coordinated effort targeting the online radicalization of minors, which identified thousands of links directing them to jihadi, violent extremist, and terrorist propaganda. According to the announcement, terrorist organizations and their online supporters are "tailoring their message and investing in new technologies and platforms to manipulate and reach out to minors." Emphasizing the use of AI, it said that the "content, short videos, memes, and other visual formats" are "carefully stylized to appeal to minors and families that may be susceptible to extremist manipulation" and that this material also "incorporate[s] gaming elements with terrorist audio and visual material."

Among the recently released AI-generated animations distributed online by supporters of jihadi organizations is a series of videos created by a pro-Hizbullah and pro-"resistance" video producer in the UK, who releases them on his Instagram account. He has 182,000 followers there, and views of his videos have topped 20 million.

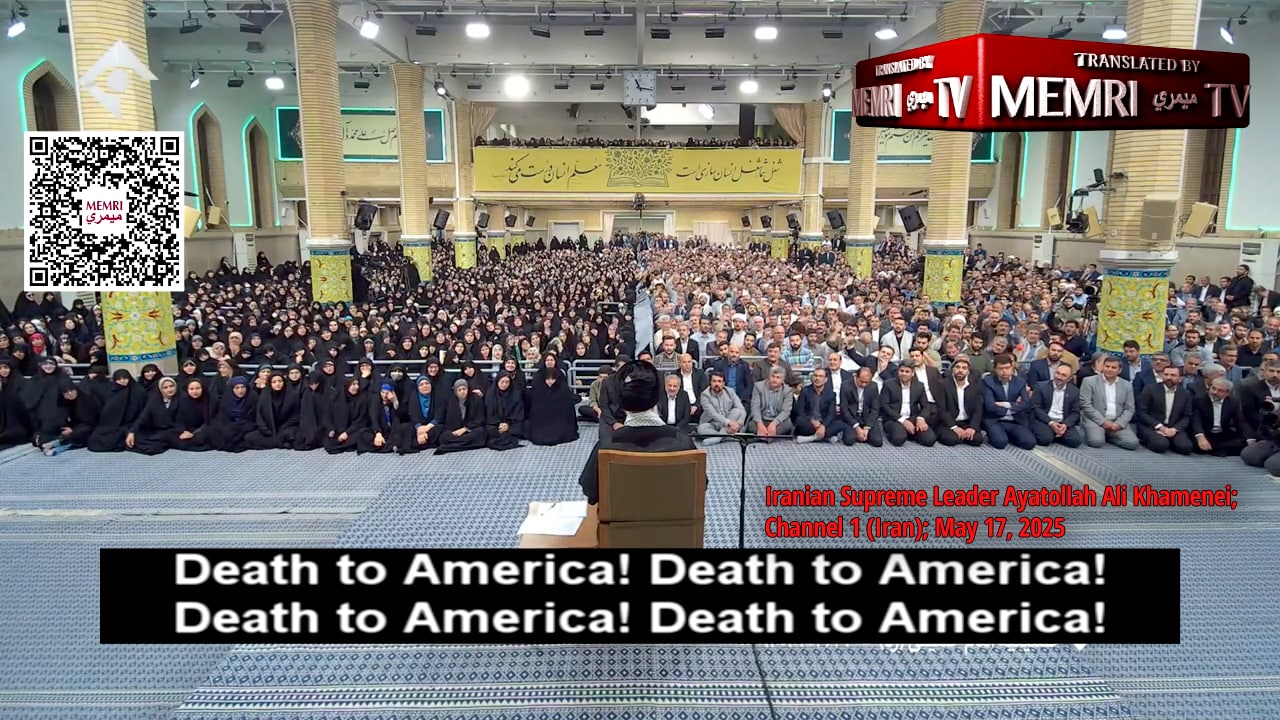

One of the online followers spreading his videos was identified as the person who chanted "Death to America" at a Dearborn, Michigan rally last year, and who is also a teacher at private Islamic schools there.

Other AI-generated animations created and distributed online by jihadi groups include videos glorifying the October 7 Hamas attack and canonizing the group's slain leader Yahya Sinwar, along with its other dead leaders. AI videos and imagery created and shared by jihadis deliver threats to the U.S. and show jihadi armies conquering enemy territory.

All of this is part of their efforts to indoctrinate children. AI-generated images and videos are being used to incite their target audiences to jihad and martyrdom, to emulate fighters even to the death, to carry out assassinations, and to commit arson – much like children playing violent video games.

Hizbullah is also using AI, and its media outlets frequently discuss the technology's importance. On October 25, 2023, just over two weeks after the October 7 Hamas attack, its affiliate Mayadeen Network posted on its website an AI-animated music video warning that Israeli and U.S. aggression would spark a land, air, and sea invasion, prompting Israelis to abandon the country as it is overrun by Hizbullah gunmen on motorcycles and struck by massive missile attacks.

Pro-Hamas social media accounts have used AI to celebrate October 7 and indoctrinate young audiences. Cartoon Filistin-Palestinian Animations, a Telegram channel specializing in animated productions, published an AI-generated video showing Palestinian fighters and civilians manufacturing IEDs and carrying out attacks, including arson attacks using explosive balloons, as Israeli communities go up in flames.

Another example is an AI-generated animated video for children glorifying Hamas military spokesman Abu Obeida as a role model for Muslim children, posted in early March 2025 by a Telegram channel affiliated with the East Turkestan Islamic Party (formerly the Turkestan Islamic Party, TIP).

Additionally, after Hamas leader Yahya Sinwar's death, the Turkish state-run TRT Network produced and aired an AI-generated video depicting its take on his last moments. In it, an AI-generated Sinwar figure "give[s] the details of [his] last battle" and describes how he "patrolled the streets, looking to hunt down Israeli soldiers."

Iran-backed Shi'ite militias linked to designated terror organizations are also integrating AI-generated imagery into their propaganda efforts. On April 4, 2025, Sabereen News, an Iraq-based Telegram channel supporting all "resistance" organizations, published a series of OpenAI-generated images in the style of the Japanese anime studio Ghibli, aimed at children and young people. They depicted soldiers with sleeve patches resembling the emblems of Ansar Allah (the Houthis) in Yemen, Hizbullah in Lebanon, and Hamas's military wing, the Al-Qassam Brigades.

The channel also released AI-generated images of U.S. President Donald Trump humiliated and defeated by the Houthis, in front of the wreckage of a plane pierced by a giant jambiya, the traditional Yemeni dagger. Another channel, affiliated with Iran-backed militias in Iraq, published an AI poster showing the Houthi military spokesman standing triumphantly over a defeated, submissive Trump, with his foot on Trump's neck. The Houthis have also produced AI-generated videos of attacks on Israel, including one from October depicting drones hitting Tel Aviv.

Jihadi groups in Syria are also using AI to reach the next generation. In December 2023, a year before it overthrew the Syrian government, Hay'at Tahrir Al-Sham (HTS), formerly the Syrian branch of Al-Qaeda and now in charge of the country, posted AI-generated images of its forces taking Aleppo in a nod to its young online supporters.

Although AI companies have promised to build strong safeguards for children into their technology, this does not appear to be happening. Highlighting the danger AI poses to youth and how easily they can be radicalized by it, the American Psychological Association issued a health advisory in June warning that adolescents are in a critical period of brain development. It urged all stakeholders to "ensure that youth safety is considered relatively early in the evolution of AI" so that the "same harmful mistakes that were made with social media" are not repeated.

The companies must recognize that terrorist groups will benefit from this technology. They bear the responsibility to establish teams and develop algorithms that continuously scrutinize their products for this activity, and to set industry standards for monitoring and blocking such use. Otherwise, a new generation will be inspired, recruited, and radicalized through AI, and will aim to attack the U.S. and the same cities where these companies are headquartered.

*Steven Stalinsky is Executive Director of MEMRI.
